I’m experimenting with automated testing for Firefox and figured it may be useful to record what I learned. I had a look at the Mochitest page on MDN as well as the main page on Automated testing at Mozilla. It is hard to know how to even begin to explain this. Mochitests are a huge ball of tests for Firefox. They run every time a change is pushed to mozilla-central, which is the sort of tip of the current state of our code and is used every day to build the Nightly version of Firefox. They’re run automatically for changes on other code repositories too. And, you can run them locally on your own version of Firefox.

This is going to have ridiculous levels of detail and jargon. Warning!

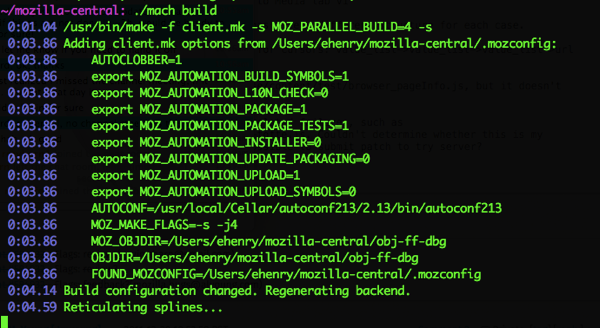

The first thing to do is to download the current code from mozilla-central and build it on my laptop. Here are the Firefox build instructions!

As usual I need to do several other things before I can do those things. This means hours of twiddling around on the command line, installing things, trying different configurations, fixing directory permissions and so on. Here are a few of the sometimes non-trivial things I ended up doing:

* updated Xcode and command line tools

* ran brew doctor and brew update, fixed all errors with much help from Stack Overflow, ended up doing a hard reset of brew

* Also, if you need to install a specific version of a utility, for example, autoconf: brew tap homebrew/versions; brew install autoconf213

* re-installed mercurial and git since they were screwed up somehow from a move from one Mac to another

* tried two different sample .mozconfig files, read through other Mac build config files, several layers deep (very confusing)

* updating my Firefox mozilla-central directory (hg pull -u)

* filed a bug for a build error and fixed some minor points on MDN

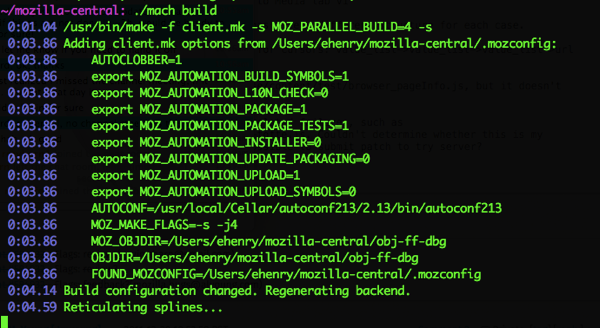

The build takes around an hour the first time. After that, pulling the changes from mozilla-central and reticulating the splines takes much less time.

Now I’m to the point where I can have a little routine every morning:

* brew doctor

* brew upgrade

* cd mozilla-central, hg pull -u

* ./mach build

Then I’m set up to run tests. Running all the mochitest-plain tests takes a long time. Running a single test may fail because it has dependencies on other tests it expects to have run first. You can also run all the tests in a particular directory, which may work out better than single tests.

Here is an example of running a single test.

./mach mochitest-browser browser/base/content/test/general/browser_aboutHome.js

Your Nightly or Nightly-debug browser will open and run through some tests. There will be a ton of output. Nifty.

Here is that same test, run with e10s enabled.

./mach mochitest-browser —e10s browser/base/content/test/general/browser_aboutHome.js

BTW if you add “2>&1 | tee -a test.log” to those commands they will pipe the output into a log file.

Back to testing. I poked around to see if I could find a super easy to understand test. The first few, I read through the test code, the associated bugs, and some other stuff. A bit overwhelming. My coworker Juan and I then talked to Joel Maher who walked us through some of the details of how mochitests work and are organized. The tests are scattered throughout the “tree” of directories in the code repository. It is useful generally to use DXR to search but I also ended up just bouncing around and getting familiar with some of the structure of where things are. For example, scarily, I now know my way to testing/mochitest/tests/SimpleTest/. Just by trying different things and looking around you start to get familiar.

Meanwhile, my goal was still to find something easy enough to grasp in an afternoon and run through as much of the process to fix a simple bug as I could manage. I looked around for tests that are known not to work under e10s, and are marked in manifest files that they should be skipped if you’re testing with e10s on. I tried turning some of these tests on and off and reading through their bugs.

Also meanwhile I asked for commit access level 1 (for the try server) so when I start changing and fixing things I can at least throw them at a remote test server as well as my own local environment’s tests.

Then, hurray, Joel lobbed me a very easy test bug.

From reading his description and looking at the html file it links to, I got that I could try this test, but it might not fail. The failure was caused by god knows what other test. Here is how to try it on its own:

./mach mochitest-chrome browser/devtools/webide/test/test_zoom.html

I could see the test open up Nightly-debug and then try to zoom in and out. Joel had also described how the test opens a window, zooms, closes the window, then opens another window, zooms, but doesn’t close. I have not really looked at any JavaScript for several years and it was never my bag. But I can fake it. Hurray, this really is the simplest possible example. Not like the 600-line things I was ending up in at random. Danny looked at the test with me and walked through the JavaScript a bit. If you look at the test file, it is first loading up the SimpleTest harness and some other stuff. I did not really read through the other stuff. *handwave* Then in the main script, it says that when the window is loaded, first off wait till we really know SimpleTest is done because the script tells us so (Maybe in opposition to something like timing out.) Then a function opens the WebIDE interface, and (this is the main bit Danny explained) the viewer= winQuerInterface etc. bit is an object that shows you the state of the viewer. *more handwaving, I do not need to know* More handwaving about “yield” but I get the idea it is giving control over partly to the test window and partly keeping it. Then it zooms in a few times, then this bit is actually the meat of the test:

is(roundZoom, 0.6, "Reach min zoom");

Which is calling the “is” function in SimpleTest.js, Which I had already been reading, and so going back to it to think about what “is” was doing was useful. Way back several paragraphs ago I mentioned looking in testing/mochitest/tests/SimpleTest/. That is where this function lives. I also felt I did not entirely need to know the details of what the min and max zoom should be. Then, we close the window. Then open a new one. Now we see the other point of this test. We are checking to see that when you open a WebIDE window, then zoom to some zoomy state, then close the window, then re-open it, it should stay zoomed in or out to the state you left it in.

OK, now at this point I need to generate a patch with my tiny one line change. I went back to MDN to check how to do this in whatever way is Mozilla style. Ended up at How to Submit a Patch, then at How can I generate a patch for somebody else to check in for me?, and then messing about with mq which is I guess like Quilt. (Quilt is a nice name, but, welcome to hell.) I ended up feeling somewhat unnerved by mq and unsure of what it was doing. mq, or qnew, did not offer me a way to put a commit message onto my patch. After a lot of googling… not sure where I even found the answer to this, but after popping and then re-pushing and flailing some more, and my boyfriend watching over my shoulder and screeching “You’re going to RUIN IT ALL” (and desperately quoting Kent Beck at me) as I threatened to hand-edit the patch file, here is how I added a commit message:

hg qrefresh -m "Bug 1116802: closes the WebIDE a second time"

Should I keep using mq? Why add another layer into mercurial? Worth it?

To make sure my patch didn’t cause something shocking to happen (It was just one line and very simple, but, famous last words….) I ran the chrome tests that were in the same directory as my buggy test. (Piping the output into a log file and then looked for anything that mentioned test_zoom. ) The test output is a study in itself but not my focus right now as long as nothing says FAIL.

./mach mochitest-chrome browser/devtools/webide/test/

Then I exported the patch still using mq commands which I cannot feel entirely sure of.

hg export qtip > ~/bug-1116802-fix.patch

That looked fine and, yay, had my commit message. I attached it to the bug and asked Joel to have a look.

I still don’t have a way to make the test fail but it seems logical you would want to close the window.

That is a lot of setup to get to the point where I could make a useful one line change in a file! I feel very satisfied that I got to that point.

Only 30,000 more tests to go, many of which are probably out of date. As I contemplate the giant mass of tests I wonder how many of them are useful and what the maintenance cost is and how to ever keep up or straighten them out. It’s very interesting!

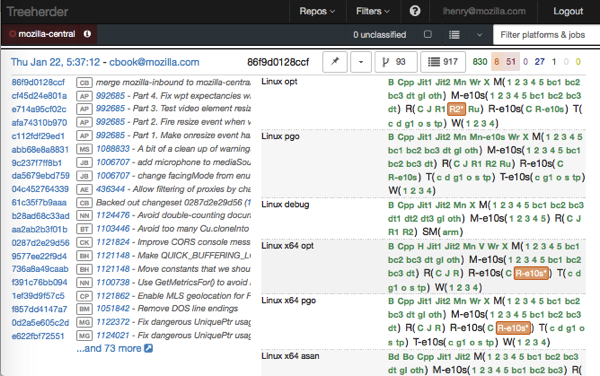

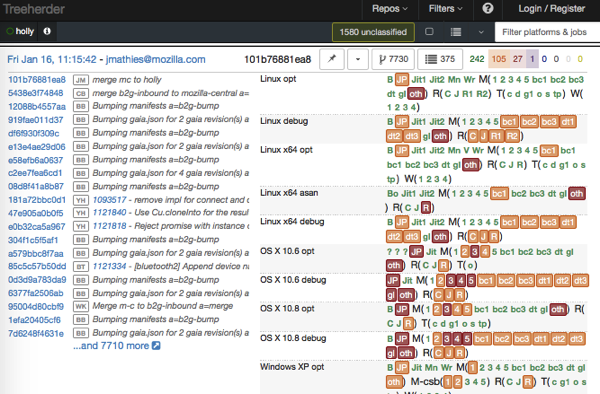

You can have a look at the complicated nature of the automated tests that run constantly to test Firefox at treeherder. For any batch of commits merged into mozilla-central, huge numbers of tests run on many different platforms. If you look at treeherder you can see a little (hopefully green) “dt” among the tests. I think that the zooming in WebIDE test that I just described is in the dt batch of tests (but I am not sure yet).

I hope describing the process of learning about this small part of Firefox’s test framework is useful to someone! I have always felt that I missed out by not having a college level background in CS or deep expertise in any particular language. And yet I have still been a developer on and off for the last 20 years and can jump back into the pool and figure stuff out. No genius badge necessary. I hope you can see that actually writing code is only one part of working in this kind of huge, collaborative environment. The main skill you need (like I keep saying) is the ability not to freak out about what you don’t know, and keep on playing around, while reading and learning and talking with people.