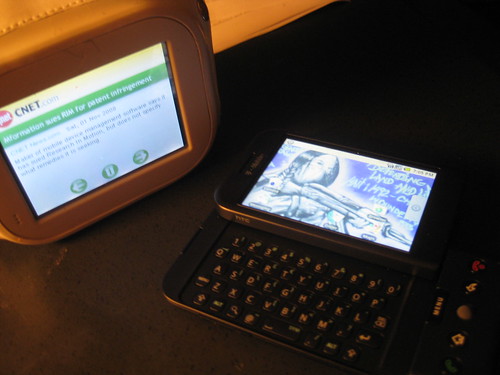

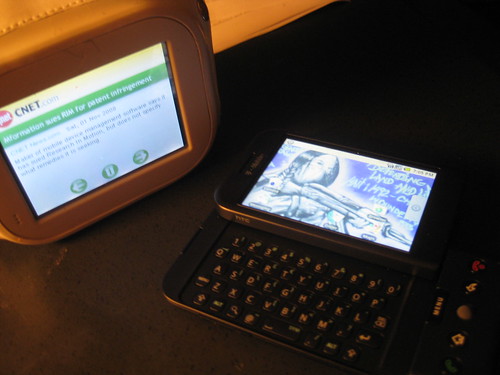

Look, my G1 made friends with my Chumby!

So far I’m very happy with the G1. It has a great feel, it’s easy to type on, its phone and net coverage is great so far in the SF Bay Area, and every day people are posting new apps for it. If you are a chronic fidgeter like me you’ll be snicking this thing open & shut all day, because of its pleasant slide-y feeling. And because you will be all like OMG I HAVE THE MOTHERF*CKING INTERNET IN THIS BORING ELEVATOR.

The first thing I did was to try out everything on the phone. My contact list, which is a giant mess, is in there from my old Razr’s SIM card. Seriously, it’s a huge mess. I starred the few people that I call all the time, to move them over to the “Favorites” tab in the Dialer. The phone dialer is nice; I like dialing on the touch screen rather than with the number keys. The other thing about Contacts/Dialer that I like is the long, detailed call log. It took me a few minutes to figure out how to add new numbers from the call log to my contacts list: click and hold on a log entry, which pulls up a new menu including an option to save to contacts.

I find it a little perturbing that I can’t tell if I’m closing an app, or just backgrounding it. Is that stuff all still running in the background?!

In the browser, I logged into my gmail account. Suddenly a wealth of data is there for me, since I’m a heavy Google user. The top bar slides down window-shade style to show new email, messages, calls, or other notifications.

Maps – Fabulous. Try street view, then the compass.

Market – Works great for me so far. Fun to browse. I like the comments/reviews on each app, especially how they are often frivolous and rude.

Amazon MP3 – addictive, good browsing, works beautifully. Buy mp3s, move them over to your computer or server with no problem at all. I already love Amazon MP3 for its lack of DRM and its great selection especially in Latin music.

Browser – Okay, but I have a little trouble zooming, clicking, entering data into tiny boxes. I think it takes practice with the tiny trackball.

Calendar – Scary good if you use Google Calendar already. I have 4 calendars going at once. So far I find week view the most useful – there’s no text on it but you can tap a colored block for a quick popup that gives details.

Gmail – okay, but I wish I could delete

Camera – still playing around with it. Works very well in low light! Seems a bit slow, but autofocus works well.

Music – Slick!

Pictures – Works just fine

YouTube – haven’t tried it yet

Voice Dialer – Works! neat! Will I actually use it? No.

I stuck some photos on it and changed my desktop to a photo from my Flickr account. There’s a Flickr group with 640 x 480 photos to use for wallpaper, and you can find more wallpaper on the various community forums. Moving from the left and right sides of the desktop to the middle & back is strangely addictive and beautiful. I tried for a while to move apps by flinging them, or holding them and scrolling, but couldn’t get that to work. Instead, if I want to move something from the center screen to the left or right, I click and hold, drag it to the trash; then go to the side screen, click and hold on the background, and add the alias to that app or shortcut.

After a while I looked at app reviews and “tips and tricks” on some of the forums: Android Forums, T-Mobile G1 forum, androidcommunity.com.

When you plug in the G1 to a Mac with a USB cable, you will see just 3 folders: albumthumbs, dcim, and Music. Poke around in there and see what you can see. Drag some music over to the G1’s Music folder and it shows up in your Music app.

When you are browsing, click and hold on a photo to download it. Not sure if this works with PDFs, movies, or sound files – I haven’t tried yet. When you download something from the browser, a new folder is created that you can see when you poke around with a USB connection. Same for Amazon MP3 and BlueBrush – they create folders you can access through a USB connection and your computer’s filesystem.

At some point I downloaded some apps and messed around with them. When I first got this beast early this week, there was barely anything except like 500 different tip calculators. Hello dorkwads, take the tax, double it and add a dollar or two, you don’t need to whip out your $300 phone to calculate the tip … OR DO YOU…
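For the record, here is roughly what all 500 of those apps boil down to, as a tiny Python sketch of my tax-doubling rule. The function name `quick_tip` and the sample tax amount are made up for illustration, and the rule only comes out near a normal tip where sales tax runs somewhere around 8 or 9 percent.

```python
def quick_tip(tax, extra=1.00):
    """Rule of thumb from above: double the tax line, then add a dollar or two."""
    return round(2 * tax + extra, 2)


# Hypothetical tax line from a dinner bill.
bill_tax = 4.25
print(f"Leave about ${quick_tip(bill_tax):.2f}")
```

Pass `extra=2.00` if you are feeling generous.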

Amazed – a simple marble-in-a-maze game.

AnyCut – looks useful to make shortcuts.

Barcode Scanner – Interesting! But has way more potential

Bluebrush – I have not really explored it but it looks like a collaborative whiteboard drawing app. I would not call its menus or icons intuitive… Flailed a while then left.

Cab4me light – Great potential! I need this! Needs more cab companies, data, a button to turn the GPS on and off.

ConnectBot – YAY I can ssh from my phone! This makes me happy. If I could ssh into my phone as well, maybe use scp, wouldn’t that be nice?

Es Musica – Tried this for fun. Hey, bikini boxing!

iSkoot – Skype for the G1!

Langtolang dictionary – Simple translation dictionary for several languages.

Shazam – This is good. It listens & samples a few seconds of whatever music you’re listening to and identifies it. I tried it with a range of music. It had great accuracy.

Strobe Light – this is really great if you’re a total asshole. Of course I downloaded it.

WikiMobile – How handy. Will never have another restaurant argument again. Am already a know-it-all trivia-hole, and now I can prove it on the spot. I haven’t tried the other Wikipedia app yet. How do the different versions compare?

Here’s what I’d like to have on my G1:

* password/keychain manager. What a pain in the ass entering all this stuff.

* a plain old compass app, unrelated to Street View.

* Ecto, or some equivalent, so I can post quickly to any of my 24812469 blogs, which are not all on Google/Blogger/Blogspot.

* Photo uploaders. Better extensions to send photos out very quickly to Flickr, my various blogs, Twitpic, or whatever. Hot buttons, so that I can snap the photo, and hit a single on-screen button to go “send to X” rather than pull up a menu, connect with gmail, start typing, and send to my Flickr email. It should be seamless, so I can take another photo or act like a human being instead of a little gnome fiddling with my magic box all day long.

* G1-Thing, to hook up one of the barcode scanners with LibraryThing.

* Inventory functions. More “barcode scanning a list of objects” functions. When I scan a bar code I don’t necessarily want to look it up on google or amazon or ShopWhatever. I might want to just add it to a list of Junk in my Trunk. I could see people scanning their CDs or books or DVDs here. Or hooking in the list of stuff in their pantry to somewhere like FoodProof, to figure out what they could cook without having to shop.

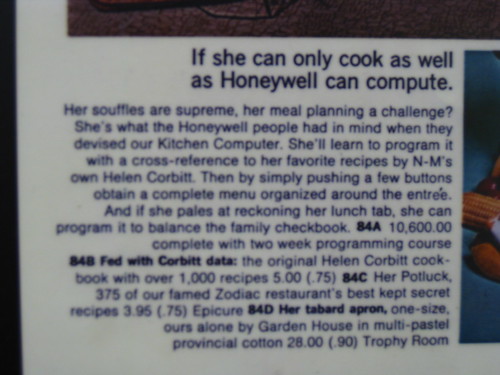

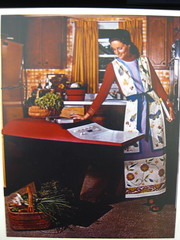

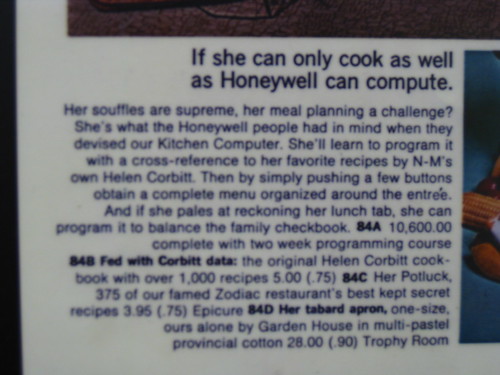

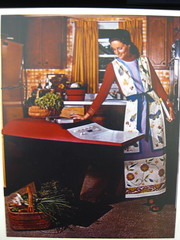

AAAAAA! Did I just mention computers and recipes in the same breath? Maybe I should go back in time and buy a Honeywell Kitchen Computer!
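Kitchen Computer or not, the inventory idea is simple enough to sketch in a few lines of Python. Everything here is hypothetical: the barcodes, the pantry items, the recipes, and the function names `scan` and `cookable` are all invented for illustration, and a real app would get its barcodes from the camera and its recipes from some recipe site.

```python
from collections import Counter


def scan(inventory, barcode):
    """Add one scanned item to a running list instead of looking it up online."""
    inventory[barcode] += 1
    return inventory


def cookable(pantry_items, recipes):
    """Return the names of recipes whose ingredients are all in the pantry."""
    have = set(pantry_items)
    return sorted(name for name, needs in recipes.items() if set(needs) <= have)


# Junk in my Trunk: scanning the same (made-up) barcode twice counts it twice.
trunk = Counter()
for code in ["0001", "0002", "0001"]:
    scan(trunk, code)

# Made-up pantry contents and recipes.
pantry = ["rice", "black beans", "onion", "eggs"]
recipes = {
    "beans and rice": ["rice", "black beans", "onion"],
    "omelette": ["eggs", "cheese"],
    "fried rice": ["rice", "eggs", "onion"],
}

print(dict(trunk))
print(cookable(pantry, recipes))
```

The point of keeping the list as plain counts is that the same scan loop works for CDs, books, DVDs, or cans of beans.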

* GPS. I would really, really love some cool GPS functions. I love the satellite tracker/detector screen on my old (borrowed) Garmin eTrex. I would just turn that thing on and stare at it for 10 minutes to see how many satellites would pop up. I’d like to know which satellites they are so I can look them up online. Better yet, pop me up some info and tell me all about them. Holy crap! SATELLITES are flying around over us in SPACE. That never ceases to be cool.

Some geocaching apps that hook in to geocaching.com would rock.

* Tide tables. I have no reason to care, except that when I’m driving up to the city, if it happens to be low tide or a super low spring tide I might swing by the beach to poke some anemones and harass a hermit crab or two. If I were still surfing, a surf report app might be nice.

* Nethack. The real kind, not the graphic version, please!

* Auto rotate. Last but not least. I wish that the screen view would rotate when I turn the phone, not just when I open the keyboard! Or is that a setting already, and I’ve missed it?